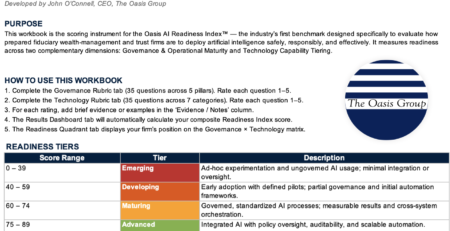

How Wealth Management Firms Should Engage With the Rise of Prediction Markets

Senior executives in wealth management are aware that younger investors are experimenting with new forms of speculation. They see more clients using sports-betting apps, crypto exchanges, and short-term trading tools. What many executives do not yet recognize is that a new market structure has emerged at the intersection of these activities, one that lets investors trade directly on the outcomes of real events. Platforms built on this structure are known as prediction markets.

Prediction markets allow investors to trade event contracts tied to real-world outcomes. These outcomes include elections, policy decisions, inflation releases, regulatory developments, and sports results. In the United States, the most prominent platform is Kalshi, which operates under CFTC oversight. CME Group and FanDuel have announced a regulated prediction market platform aimed at retail investors. PredictIt continues to operate under a restricted academic framework. Polymarket has restructured its operations following regulatory action. These examples show that event-driven trading is no longer fringe. It is becoming part of the financial landscape.

Event contracts appeal to younger investors because they offer a direct way to express conviction. Instead of buying an ETF and hoping for a Federal Reserve rate cut, an investor can trade on the probability of the rate cut itself. Instead of buying or avoiding a politically sensitive stock, an investor can trade on the likelihood that a certain candidate wins. These markets feel intuitive to a generation that consumes news and policy updates continuously.

Prediction markets will not replace traditional financial products. That is not their purpose. They do, however, influence client behavior in ways firms cannot ignore. They shape expectations about policy, economics, and political outcomes. They attract speculative capital. They appeal to clients who prefer simple, binary choices to complex financial instruments. This reality affects how clients engage with their advisors and how they respond to market volatility.

Executives are beginning to see signs of prediction market activity in their client base. These signs include unexpected losses, unusually high risk-taking, and sudden swings in investor sentiment that do not align with portfolio construction. Many of these behaviors originate in trading accounts outside the advisor’s view. To respond effectively, firms need a clear engagement strategy.

Understanding Why Engagement Matters

Firms must understand the significance of prediction markets before adopting a framework. There are three primary reasons.

The first reason is visibility. Advisors cannot form an accurate view of client risk if clients are making event-based bets with all-or-nothing outcomes that the advisor never sees. A client who loses money in an election market may become overly conservative. A client who wins several event contracts may become overly aggressive. Both behaviors affect planning and must be surfaced.

The second reason is credibility. Younger clients expect advisors to understand the tools they use. They want advisors who can explain event contracts clearly and calmly. Advisors who dismiss these platforms risk appearing out of touch. This weakens advisory relationships.

The third reason is insight. Prediction markets produce real-time probabilities for events that influence investment strategy. These probabilities can support CIO commentary, client communication, and internal discussions about risk. Firms can use the data without facilitating client trading.

With this context in place, the following framework provides a responsible path for engaging with prediction markets.

Stage One: Observation

The first step is simple observation. Firms do not need to endorse or offer prediction markets. They do, however, need to understand the information these markets generate. Event contracts provide market-implied probabilities for outcomes that influence asset allocation and risk posture. These include inflation releases, rate decisions, elections, and regulatory developments.

Firms can reference these probabilities in market updates, advisor briefings, and CIO notes. This allows advisors to stay informed without driving clients toward speculative trading. It also helps investment committees compare internal forecasts with market expectations. Observation is low risk and immediately useful.
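To make the idea of a market-implied probability concrete, here is a minimal Python sketch. It assumes a binary contract that pays $1 if the event occurs and $0 otherwise, so a price of $0.62 reads as roughly a 62% probability. The `EventQuote` class, the `gap_vs_house_view` helper, and the quote values are hypothetical illustrations, not any platform's API or real market data, and the bid-ask midpoint is only one simple way to collapse quotes into a single estimate.

```python
from dataclasses import dataclass


@dataclass
class EventQuote:
    """Hypothetical quote snapshot for a binary event contract.

    Prices are in dollars per contract, assuming the contract pays $1 if the
    event occurs and $0 otherwise.
    """
    name: str
    yes_bid: float
    yes_ask: float

    def implied_probability(self) -> float:
        # Check that the quotes form a sensible market on a $0-$1 scale.
        if not 0.0 <= self.yes_bid <= self.yes_ask <= 1.0:
            raise ValueError("quotes must satisfy 0 <= bid <= ask <= 1")
        # Use the bid-ask midpoint as a simple market-implied probability.
        return (self.yes_bid + self.yes_ask) / 2


def gap_vs_house_view(quote: EventQuote, house_probability: float) -> float:
    """Return house forecast minus market-implied probability, in percentage points."""
    if not 0.0 <= house_probability <= 1.0:
        raise ValueError("house_probability must be between 0 and 1")
    return round((house_probability - quote.implied_probability()) * 100, 1)


if __name__ == "__main__":
    # Illustrative numbers only, not real market data.
    fed_cut = EventQuote("Fed cuts rates at next meeting", yes_bid=0.60, yes_ask=0.64)
    print(f"{fed_cut.name}: market-implied probability {fed_cut.implied_probability():.0%}")
    print(f"House view minus market: {gap_vs_house_view(fed_cut, 0.50):+.1f} pts")
```

With these illustrative quotes, the script reports a 62% market-implied probability and a gap of -12.0 points against a 50% house view, the kind of divergence an investment committee might flag for discussion. In thin markets with wide bid-ask spreads, the midpoint is a less reliable signal.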

Stage Two: Advisory Preparedness

The second stage is preparing advisors to address prediction markets directly with clients. Firms should update their discovery process. Discovery forms should ask whether clients use sports-betting platforms, prediction markets, or crypto derivatives with event-like outcomes. These questions should sit alongside questions about options, margin, concentrated positions, and private investments.

Advisors should adopt a supportive and non-judgmental tone. Clients who feel judged will hide this behavior. Clients who feel understood will share information that materially affects their financial picture. Advisors should document this activity because it affects liquidity, risk tolerance, and long-term planning.

Stage Two ensures that advisors can speak credibly about prediction markets even if the firm does not support client participation.

Stage Three: Optional Integration

A small number of firms may eventually integrate prediction market insights into their investment process. Integration does not require enabling client trading. Instead, firms can use event probabilities to inform sector tilts, tactical positioning, or policy-sensitive portfolio adjustments.

Integration requires strong governance and should be approached cautiously. It offers competitive differentiation for firms that can build these insights into their financial planning assumptions and client conversations.

Governance and Compliance Considerations

Prediction markets carry real risks. Event contracts often behave like binary options, with the possibility of total loss. User interfaces are designed for speed, simplicity, and engagement, which can encourage impulsive behavior. Regulators are still defining which types of contracts are permitted, so firms must stay current.
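The all-or-nothing payoff is easy to see with simple arithmetic. The hypothetical `yes_contract_pnl` function below is a sketch, not any platform's settlement logic: it assumes each winning contract pays $1, ignores fees, and computes the profit or loss on a position in "yes" contracts.

```python
def yes_contract_pnl(contracts: int, price: float, event_occurs: bool) -> float:
    """Profit or loss on 'yes' contracts bought at `price` dollars each.

    Hypothetical helper: assumes each contract pays $1 if the event occurs
    and $0 otherwise, and ignores fees.
    """
    if contracts < 0:
        raise ValueError("contracts must be non-negative")
    if not 0.0 < price < 1.0:
        raise ValueError("price must be strictly between 0 and 1")
    stake = contracts * price  # the full amount at risk
    payout = float(contracts) if event_occurs else 0.0
    return round(payout - stake, 2)


if __name__ == "__main__":
    # Illustrative position: 500 contracts at $0.70, a $350 stake.
    print(yes_contract_pnl(500, 0.70, event_occurs=True))   # win
    print(yes_contract_pnl(500, 0.70, event_occurs=False))  # loss
```

A 70-cent contract that settles in the client's favor earns 30 cents, while one that does not forfeits the full 70 cents. This position therefore gains $150.00 or loses its entire $350.00 stake, with no partial outcome in between.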

Wealth management firms should create policies describing how advisors may discuss prediction markets. These policies should address documentation, suitability considerations, and escalation procedures for problematic behavior. Governance must treat prediction markets as a genuine part of the client’s financial life.

Final Perspective

Prediction markets are becoming a durable feature of the financial landscape. They align with how younger investors think, trade, and absorb information. Wealth management firms do not need to offer these products. They do need to understand them. A clear engagement framework will strengthen advisor credibility, improve risk assessment, and protect client outcomes. Firms that ignore this category will miss a growing component of investor behavior and risk losing relevance with the next generation.